This is the first of a two-part series that showcases step-by-step processes to query data from other sources when you are writing a Log Analytics query.

For this example, we will query data that is stored in Azure blob storage and use that data in a Log Analytics query.

In this example, I will be querying Windows 10 version information which I stored in an Azure blob. The goal of this query was to send me a notification whenever a new version of Windows 10 was identified within any of our customer environments where we gather this data.

When this query was finalized, I moved it into a Logic App which sends me a weekly update for any new versions of Windows 10 which are found “in the wild”. Step-By-Step : The following steps were required to make this happen: create the file, create the storage account, create the container, upload the file to the Azure blob storage, identify the URL, and “secret token” and develop/test the query in Log Analytics.

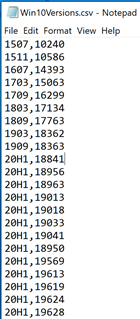

Create the file The easiest way I found to create the file was to generate the required contents in Excel and save the resulting file off as a CSV.

Here is a subset of the content I used: NOTE : The first line does not need to include what the fields are as the query language defines the field names when we access the external data.

1909

18363

20H1

18841

20H1

18956

20H1

18963

20H1

19013

20H1

19018

20H1

19033

Once it has been saved off as a CSV it looks like this in notepad:

Graphic 1: CSV in notepad

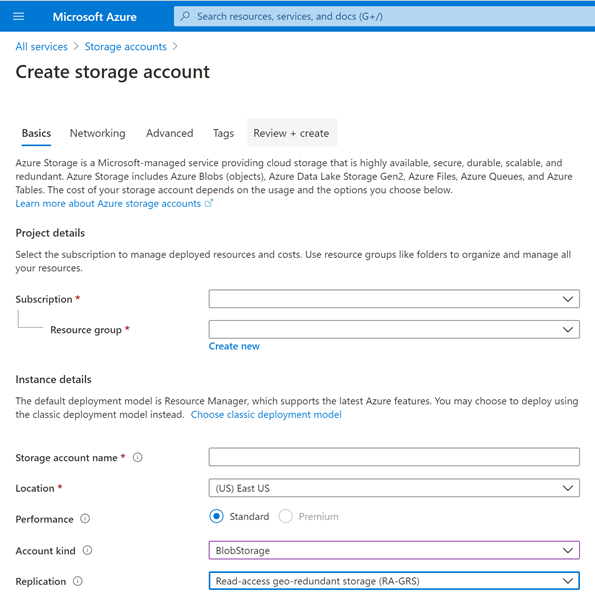

Create a new storage account (if you aren’t using an existing one)

To create the new storage account (if you don’t have one already that you want to use), create a storage account of the account type “BlobStorage”.

To create the storage account, you will need to choose your subscription and resource group as well as choose the storage account name, location, performance, account kind (BlobStorage), and replication.

Graphic 2: Creating a storage account

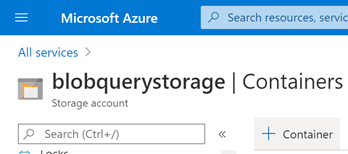

Create a new container (if you aren’t using an existing one) Create a new container (if you aren’t using an existing one) where the file will be stored by clicking on the + Container option within the storage account.

Graphic 3: Adding a container

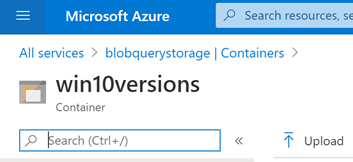

Upload the file to the Azure blob storage Open the container, and us the upload option within the container.

Graphic 5: Uploading into the container

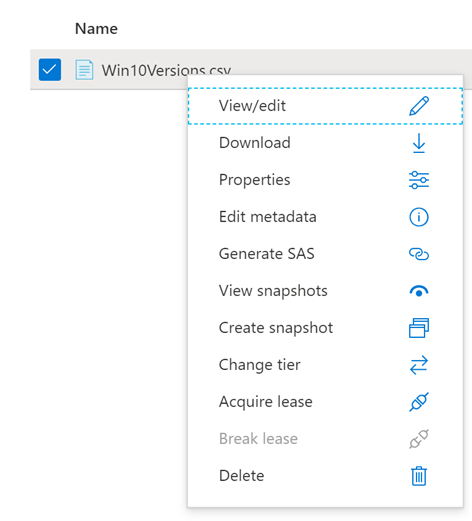

Identify the “secret token” needed to access the blob Now that we have our file in place, we can create the secret token that we will need to access the file contents. To do this we right-click on the file and choose “Generate SAS”.

Graphic 7: Choosing to generate the SAS file the file

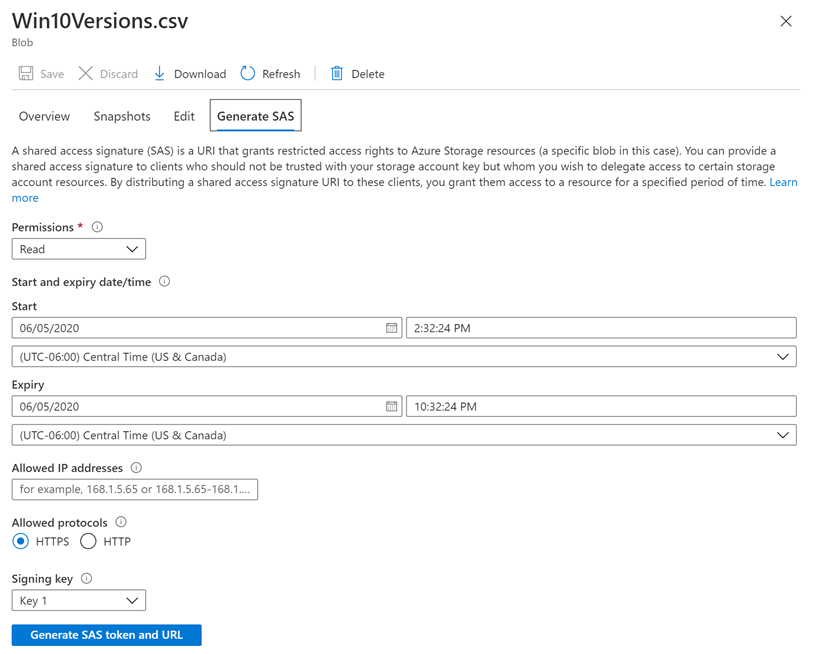

On the Generate SAS page, we can specify what level of permissions are allowed (read for this example), the start and expiration time for the SAS and we can specify the allowed protocols (HTTPS in this example) and the signing key.

Graphic 8: Configuring the shared access signature (SAS)

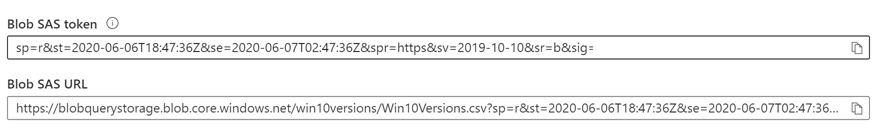

Graphic 9: Copying the Blob SAS token, and Blob SAS URL

Copy both contents somewhere as you will need them when you query the data via Log Analytics.

The only field you will need is the Blob SAS token which has sample contents below:

https://blobquerystorage.blob.core.windows.net/win10versions/Win10Versions.csv?sp=r&st=2020-06-05T19:32:24Z&se=2020-06-06T03:32:24Z&spr=https&sv=2019-10-10&sr=b&sig=dN9x%2FgjQyTv5Fqp%2BGCqEwyGuV7tDKUOW9AgLvPmzzIM%3D

This field needs to be split into two parts. The first up to the name of the file, the second the remainder of the field. An example of the split is shown below.

URL to the file: https://blobquerystorage.blob.core.windows.net/win10versions/Win10Versions.csv

Secret token: ?sp=r&st=2020-06-05T19:32:24Z&se=2020-06-06T03:32:24Z&spr=https&sv=2019-10-10&sr=b&sig=dN9x%2FgjQyTv5Fqp%2BGCqEwyGuV7tDKUOW9AgLvPmzzIM%3D

Develop and test the query to access the contents of the file in blob storage

The next step is to develop and test the query to access the file content we put into blob storage. My sample query is below with the two pieces of data required from above shown in bold as part of the query below.

let OSBuilds =

externaldata (Version:string, Build:string) [

@“ https://blobquerystorage.blob.core.windows.net/win10versions/Win10Versions.csv“

// URL to the file

h?sp=r&st=2020-06-05T19:32:24Z&se=2020-06-06T03:32:24Z&spr=https&sv=2019-10-10&sr=b&sig=dN9x%2FgjQyTv5Fqp%2BGCqEwyGuV7tDKUOW9AgLvPmzzIM%3D “

// Secret token to the file

]

| project Version, toreal(Build);

OSBuilds

NOTE: The Blob SAS token does not include the “?” at the start which is required. This is why I split the Blob SAS URL field instead of just using the Blob SAS token for the secret token.

Below is a subset of the results of the final query pulling back data from the contents of the file in the blob.

Graphic 10: Querying Data in a log analytics query from contents of a file stored in a blob

NOTE: For this blog post I have not provided the actual values for the Blob SAS token and the Blob SAS URL as I replaced a few characters in the string so nobody tries to query my sample data source.

Additional reference:

- Exploring a data set with Kusto: https://vincentlauzon.com/2020/03/11/exploring-a-data-set-with-kusto

- Docs team article on reading from blob storage: https://docs.microsoft.com/en-us/azure/role-based-access-control/built-in-roles#storage-blob-data-reader

Summary: By using the “externaldata” piece of the Kusto query language, we can reach out and grab data from storage such as a file stored in a blob. The key to this was to first get a file into blob storage, then get the SAS and then use the SAS contents (configured with the ? in front of the secret token) to query the data. Finally, remember that the secret does expire so you will need to update the key occasionally or set the expiration date for a long time in the future.

In the next part of this blog series, we will see how to query Azure Metrics as part of a Log Analytics query!

;)