Why do semantics in AI matter?

Semantics lets a search engine interpret the context and intent behind user queries (prompts) rather than just matching keywords. The results are more accurate and relevant, which improves the user experience.

By combining semantic understanding with advanced indexing techniques, AI NLP search systems can deliver precise and contextually appropriate results. This synergy between semantic meaning and indexing is what drives the effectiveness and efficiency of modern AI search technologies.

Let’s take a high-level look at the process:

- Tokenization: Break down the text into tokens.

- Embeddings: Convert tokens into vectors using an embedding model.

- Vectorization: Represent entire documents or text chunks as vectors.

- Indexing: Organize and store vectors in a vector database.

- Semantic Search: Retrieve relevant vectors based on query similarity.

- RAG (Retrieval-Augmented Generation): Use retrieved information to augment generative model responses.

The diagram below illustrates a user request being vectorized and sent to the vector database for a similarity search. The organization’s documents, shown on the right, are used to enhance the LLM response.

Breaking Down the Process

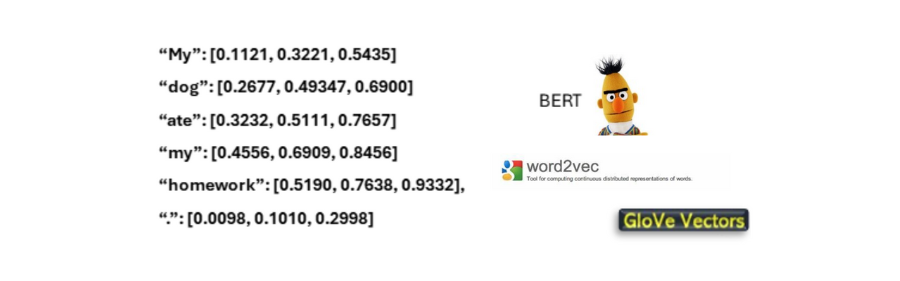

How is semantic meaning applied to this prompt? “My dog ate my homework.”

1. Tokenization

Tokenization is the process of breaking down text into smaller units, such as words, phrases, or even characters. In natural language processing (NLP), tokenization is a crucial step because it converts a continuous stream of text into discrete elements that algorithms can analyze and process. For example, the sentence “My dog ate my homework.” can be tokenized into the following tokens, each paired with an illustrative numeric ID:

{“My”: 20, “dog”: 21, “ate”: 22, “my”: 23, “homework”: 24, “.”: 25}
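The tokenization step can be sketched in a few lines of Python. This is a minimal word-level illustration, not how production tokenizers work (most modern models use subword schemes). The helper names `tokenize` and `build_vocab` are hypothetical, and the starting ID of 20 is chosen only to mirror the example above.

```python
import re

def tokenize(text):
    """Split text into word tokens and standalone punctuation marks."""
    return re.findall(r"\w+|[^\w\s]", text)

def build_vocab(tokens, start_id=0):
    """Assign an integer ID to each distinct token, in order of first appearance."""
    vocab = {}
    for token in tokens:
        if token not in vocab:
            vocab[token] = start_id + len(vocab)
    return vocab

tokens = tokenize("My dog ate my homework.")
vocab = build_vocab(tokens, start_id=20)  # 20 is an arbitrary, illustrative offset
token_ids = [vocab[t] for t in tokens]

print(tokens)
print(token_ids)
```

Note that “My” and “my” receive different IDs here because the sketch is case-sensitive, just like the example above.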

2. Embeddings

Embeddings are numerical representations of text that capture the semantic meaning of words or phrases. They are typically high-dimensional vectors that encode the relationships between different pieces of text, so that text with similar meaning ends up close together in the vector space. Embeddings allow AI models to understand and compare the meanings of words in a more nuanced way than simple keyword matching. Popular techniques for generating embeddings include Word2Vec, GloVe, and BERT.

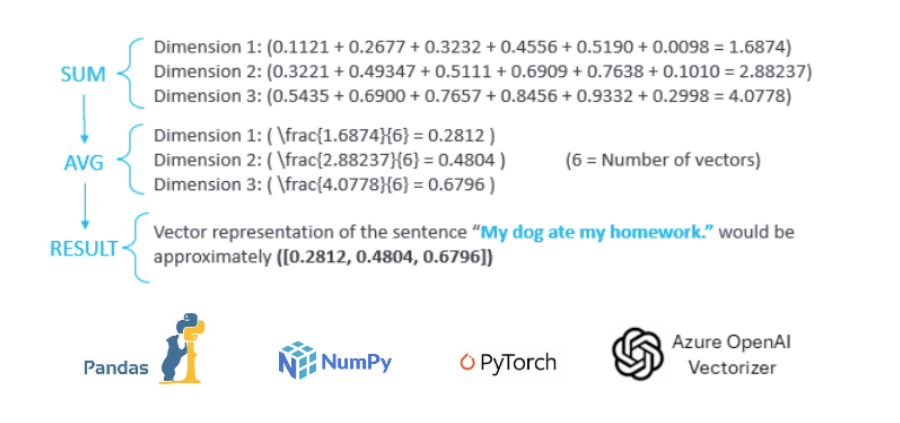
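To make the idea of "comparing meanings" concrete, here is a small sketch that measures cosine similarity between word vectors. The three-dimensional vectors are hand-written assumptions for illustration only; a real embedding model would produce vectors with hundreds or thousands of learned dimensions.

```python
import math

def cosine_similarity(a, b):
    """Return the cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

# Hand-made toy vectors (assumed values, not output of any real model).
toy_embeddings = {
    "dog": [0.90, 0.10, 0.05],
    "puppy": [0.85, 0.20, 0.05],
    "homework": [0.05, 0.10, 0.95],
}

sim_dog_puppy = cosine_similarity(toy_embeddings["dog"], toy_embeddings["puppy"])
sim_dog_homework = cosine_similarity(toy_embeddings["dog"], toy_embeddings["homework"])

print(f"dog vs puppy:    {sim_dog_puppy:.3f}")
print(f"dog vs homework: {sim_dog_homework:.3f}")
```

With these toy values, "dog" and "puppy" score close to 1 while "dog" and "homework" score much lower, which is the behavior a trained embedding model aims for.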

3. Vectorization

Vectorization is the process of converting text data into numerical vectors. This step is essential for machine learning and NLP tasks because algorithms operate on numerical data. Vectorization can be done using various methods, including one-hot encoding, TF-IDF (Term Frequency-Inverse Document Frequency), and embeddings. The resulting vectors can then be used for tasks such as classification, clustering, and similarity analysis.
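As one example of the methods listed above, the following sketch computes TF-IDF vectors using only the standard library. It assumes the plain textbook weighting (term frequency times `log(N / document frequency)`) and whitespace tokenization; libraries such as scikit-learn apply smoothing and normalization, so their numbers will differ. The function name `tfidf_vectors` and the sample documents are made up for illustration.

```python
import math
from collections import Counter

def tfidf_vectors(docs):
    """Return (vocabulary, vectors), one TF-IDF vector per document."""
    tokenized = [doc.lower().split() for doc in docs]
    vocab = sorted({term for tokens in tokenized for term in tokens})
    n_docs = len(tokenized)
    # Document frequency: in how many documents each term appears.
    doc_freq = Counter(term for tokens in tokenized for term in set(tokens))
    idf = {term: math.log(n_docs / doc_freq[term]) for term in vocab}
    vectors = []
    for tokens in tokenized:
        counts = Counter(tokens)
        vectors.append([counts[term] / len(tokens) * idf[term] for term in vocab])
    return vocab, vectors

docs = [
    "my dog ate my homework",
    "my cat ate my lunch",
    "my teacher graded homework",
]
vocab, vectors = tfidf_vectors(docs)

for term, weight in zip(vocab, vectors[0]):
    print(f"{term:10s} {weight:.3f}")
```

A term that appears in every document ("my") gets a weight of zero, while a term unique to one document ("dog") gets the highest weight there. TF-IDF vectors reflect word statistics only; capturing meaning requires embeddings.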

4. Indexing

Indexing involves organizing and storing data in a way that allows for efficient retrieval and search operations. In the context of AI and NLP, indexing often refers to creating an index of vectors or embeddings that represent text data. This index enables fast and accurate search operations, such as finding documents or sentences that are similar to a given query.
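The sketch below shows the basic contract of a vector index: store vectors under an ID, then return the closest ones for a query. It is a brute-force, in-memory illustration with a hypothetical class name (`InMemoryVectorIndex`); real vector databases typically rely on approximate nearest-neighbor structures to stay fast at scale, which this example does not attempt.

```python
import math

class InMemoryVectorIndex:
    """Toy vector index: stores unit-length vectors and scans them all on search."""

    def __init__(self):
        self._ids = []
        self._vectors = []

    @staticmethod
    def _normalize(vector):
        norm = math.sqrt(sum(x * x for x in vector))
        if norm == 0:
            raise ValueError("cannot index or query with a zero vector")
        return [x / norm for x in vector]

    def add(self, doc_id, vector):
        """Store a vector under doc_id, normalized so dot product equals cosine."""
        self._ids.append(doc_id)
        self._vectors.append(self._normalize(vector))

    def search(self, query_vector, top_k=3):
        """Return up to top_k (doc_id, score) pairs, most similar first."""
        query = self._normalize(query_vector)
        scored = [
            (doc_id, sum(q * v for q, v in zip(query, vector)))
            for doc_id, vector in zip(self._ids, self._vectors)
        ]
        scored.sort(key=lambda pair: pair[1], reverse=True)
        return scored[:top_k]

# Assumed toy vectors; in practice these come from an embedding model.
index = InMemoryVectorIndex()
index.add("pets", [0.9, 0.1, 0.0])
index.add("school", [0.1, 0.9, 0.1])
index.add("cooking", [0.0, 0.1, 0.9])

results = index.search([0.8, 0.3, 0.0], top_k=2)
print(results)
```

Normalizing at insert time is a small design choice: it moves work out of the query path, so each search only needs dot products.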

5. Semantic Search

Semantic search is a search technique that understands the meaning and context of search queries to provide more relevant and accurate results. Unlike traditional keyword-based search, semantic search uses embeddings and other NLP techniques to interpret the intent behind a query and retrieve information that matches the query’s meaning, even if the exact keywords are not present.
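The difference from keyword search can be shown with a deliberately tiny example. The word vectors below are a hand-built stand-in for a trained embedding model (an assumption made purely for illustration): synonyms are assigned the same concept axis. The query shares no words with either document, yet the similarity score still ranks the right one first.

```python
import math
import re

# Hypothetical concept axes: [pet, eating, schoolwork, weather].
TOY_WORD_VECTORS = {
    "dog": [1, 0, 0, 0], "canine": [1, 0, 0, 0],
    "ate": [0, 1, 0, 0], "devoured": [0, 1, 0, 0],
    "homework": [0, 0, 1, 0], "assignment": [0, 0, 1, 0],
    "weather": [0, 0, 0, 1], "sunny": [0, 0, 0, 1],
}

def words(text):
    return re.findall(r"\w+", text.lower())

def embed(text):
    """Sum the toy vectors of known words; unknown words contribute nothing."""
    vector = [0.0] * 4
    for word in words(text):
        for i, value in enumerate(TOY_WORD_VECTORS.get(word, [0] * 4)):
            vector[i] += value
    return vector

def cosine(a, b):
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return sum(x * y for x, y in zip(a, b)) / norm if norm else 0.0

def keyword_overlap(query, doc):
    """Count distinct words shared by query and document (keyword matching)."""
    return len(set(words(query)) & set(words(doc)))

documents = ["My dog ate my homework.", "The weather is sunny today."]
query = "canine devoured assignment"

query_vector = embed(query)
ranked = sorted(documents, key=lambda d: cosine(query_vector, embed(d)), reverse=True)

for doc in ranked:
    print(keyword_overlap(query, doc), round(cosine(query_vector, embed(doc)), 3), doc)
```

Keyword overlap is zero for both documents, so a keyword-only search has nothing to rank on, while the vector comparison puts the homework sentence on top.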

6. Retrieval-Augmented Generation (RAG)

RAG is a method that combines retrieval-based and generation-based approaches to improve the accuracy and relevance of AI-generated responses. In RAG, relevant information is first retrieved from a database or document repository, and this information is then added to the prompt so that the language model can use it when generating a response. Grounding the model in retrieved content helps make the generated responses more contextually accurate and informative.
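The retrieve-then-generate flow described above can be sketched as follows. Everything here is a stand-in: `toy_retrieve` ranks a few made-up passages by shared words (a real system would query a vector index), and `toy_generate` is a placeholder where a call to a language model would go. None of these names come from a specific library.

```python
import re

def build_augmented_prompt(question, passages):
    """Combine retrieved passages and the user question into a single prompt."""
    context = "\n".join(f"[{i}] {p}" for i, p in enumerate(passages, start=1))
    return (
        "Answer the question using only the context below.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {question}\nAnswer:"
    )

def answer_with_rag(question, retrieve, generate, top_k=2):
    """Retrieve passages, augment the prompt, then call the generator."""
    passages = retrieve(question, top_k)
    prompt = build_augmented_prompt(question, passages)
    return generate(prompt), prompt

# Made-up organizational documents, for illustration only.
DOCUMENTS = [
    "Homework is due every Friday by 5 pm.",
    "Late homework loses ten percent per day.",
    "The cafeteria opens at 8 am.",
]

def toy_retrieve(question, top_k):
    """Placeholder retriever: rank by shared words instead of vector similarity."""
    q_words = set(re.findall(r"\w+", question.lower()))
    def overlap(doc):
        return len(q_words & set(re.findall(r"\w+", doc.lower())))
    return sorted(DOCUMENTS, key=overlap, reverse=True)[:top_k]

def toy_generate(prompt):
    """Placeholder for a language model call."""
    return f"(model output for a {len(prompt)}-character prompt)"

answer, prompt = answer_with_rag("When is homework due?", toy_retrieve, toy_generate)
print(prompt)
print(answer)
```

Passing the retriever and generator in as functions keeps the orchestration independent of any particular vector database or model provider.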

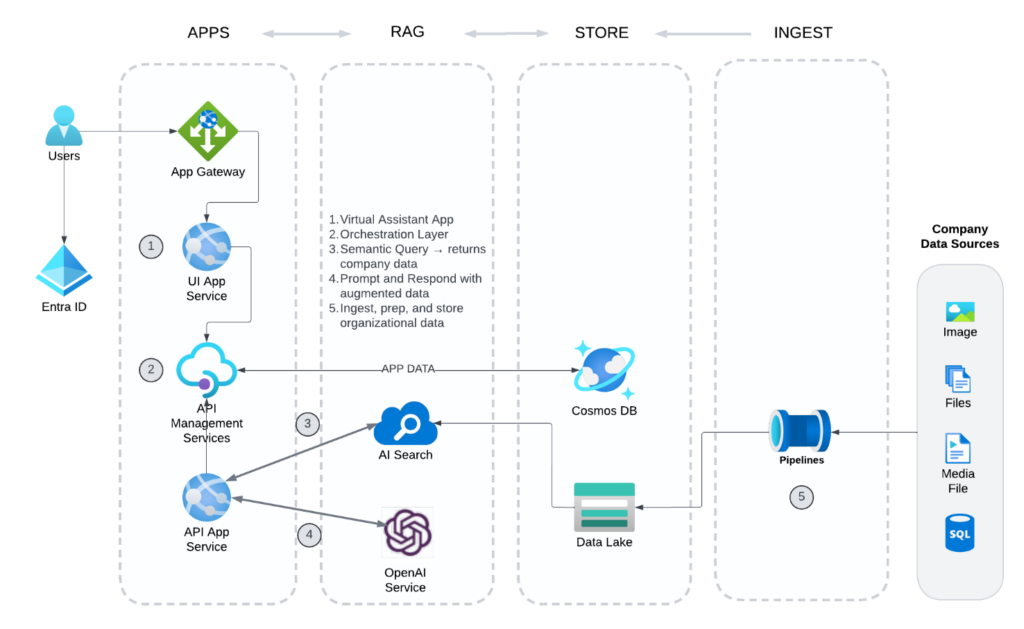

The illustration showcases a general-purpose Azure RAG implementation, where organizational data and documents are integrated for AI-driven reasoning. Azure OpenAI and Azure AI Search are used to generate semantic meaning and prepare the data for consumption in the RAG architecture.

Conclusion

Combining semantic meaning with advanced indexing improves search accuracy and user satisfaction by delivering results that are both relevant and insightful. As AI search technologies advance, the integration of semantic understanding and sophisticated indexing will continue to drive innovation and raise the bar for search performance.

Organizations should harness advancements in AI, RAG, and data vectorization to enhance their search capabilities, integrate their data with AI, and remain competitive in a constantly evolving digital landscape.
